11th Oct, 2022

Intro

This post has a few key objectives. Firstly, I wanted to share a bit of background as to why I am interested in web performance, to establish my bona fides, so to speak. Secondly, I wanted to show that it is possible to create really fast user experiences. There is no magic involved; it just takes commitment over a period of time.

My third objective is a wider point. Look at all the steps we had to take at Schuh to make the website fast. Yes, it is great that we took the steps and became fast. But we should never have had to take them in the first place. I am not pointing the finger of blame at the Schuh development team, as this issue is all but universal on any reasonably large website. We have allowed websites to become overly complex, not to the benefit of the user experience but to its detriment. All these websites have been built for the user, and that user experience is based on what is sent to the browser (HTML/CSS/JS). It should be to the great shame of most development teams that they have given almost zero regard to the quality of the code sent to the browser.

If we are truly to deliver great experiences, then we must care about every byte sent to the browser. Sub-standard HTML is the equivalent of a dusty shelf in a luxury fashion store: it should just not be tolerated.

An Optimiser at Heart

In one way or another, I have been interested in making websites faster for over 10 years. However, making systems efficient is something I have been at for a lot longer; 20 years longer, in fact. I may never have been a full-time web performance engineer, but I get web performance!

I first started programming when I was 11, on a ZX Spectrum 128k+ computer. It had 128k of RAM, which isn't very much! I wrote hundreds of programs, from games to utilities. With such limited computing power, you learned to be resourceful. In computing class at school we used BBC Micros, again with very limited computing power. For the final class programming assessment we were given a brief. As the most experienced programmer in the class, I went overboard: I wrote a program that implemented all the features required for the assessment, and then added a whole load of additional features. I even went as far as adding some scrolling credits at the end. On submission day, it dawned on me that what I had written wasn't actually very good. Yes, it did the job, but it wasn't neat, it wasn't efficient, it was over-complicated. So, with not much time to go, I rewrote the whole thing using code that was as efficient as possible, while still including comments. I submitted it and passed with flying colours.

Moving on to university, I had a programming course to do: programming for non-computer-science students. There were around 50 in the class.

We had a final assignment to write a program simulating a random walk, halting the program when every square had been walked. The agent could be moved one step at a time, to one of the 8 positions around its current position (think of a queen in chess, but she can only move 1 square).

The obvious solution was to generate a random number between 1 and 8, one for each of these positions. That's what everyone else did. I generated 2 random numbers, either 1 or 0, one for each Cartesian coordinate. While it might sound simpler to generate 1 random number instead of 2, my algorithmic choice made the rest of the program considerably easier: easier to write, easier to understand, and easier to adapt if, for example, the agent could suddenly move by 2 squares.

It turned out that my algorithmic choice was also the choice made by the tutor.
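The two-number approach can be sketched in Python. This is a minimal illustration, not the original assignment code: the grid size, the starting square and the rule for moves that would leave the grid (they are simply redrawn) are all assumptions. Each offset is drawn from -1, 0 or +1 so that all eight neighbouring squares are reachable.

```python
import random


def random_step(rng: random.Random, max_step: int = 1) -> tuple[int, int]:
    """Draw one offset per Cartesian axis; reject (0, 0), which would mean standing still."""
    offsets = range(-max_step, max_step + 1)
    while True:
        dx = rng.choice(offsets)
        dy = rng.choice(offsets)
        if (dx, dy) != (0, 0):
            return dx, dy


def walk_until_covered(width: int, height: int, seed: int = 0, max_step: int = 1) -> int:
    """Random-walk from the centre of the grid until every square has been visited.

    Returns the number of moves taken. Moves that would leave the grid are redrawn
    (an assumption; the original assignment may have handled edges differently).
    """
    rng = random.Random(seed)
    x, y = width // 2, height // 2
    visited = {(x, y)}
    moves = 0
    while len(visited) < width * height:
        dx, dy = random_step(rng, max_step)
        nx, ny = x + dx, y + dy
        if 0 <= nx < width and 0 <= ny < height:
            x, y = nx, ny
            visited.add((x, y))
            moves += 1
    return moves


print(walk_until_covered(8, 8, seed=42))
```

Letting the agent move up to two squares only means changing `max_step`. With a single number from 1 to 8, the mapping from number to position would have to be rewritten as a 24-entry table instead.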

It is in my nature to optimise, to make efficient, to improve.

Before page speed, there was server speed

Some 12 years ago, when I was a senior developer at Allsaints, I was responsible for BAU (Business As Usual): working with a team of developers to fix bugs, tweak existing features and ensure the site ran smoothly and was stable. As part of that, I looked after the server infrastructure. I followed on from my friend and mentor (although I called him my minotaur), Danny Angus. I learned a lot from Danny.

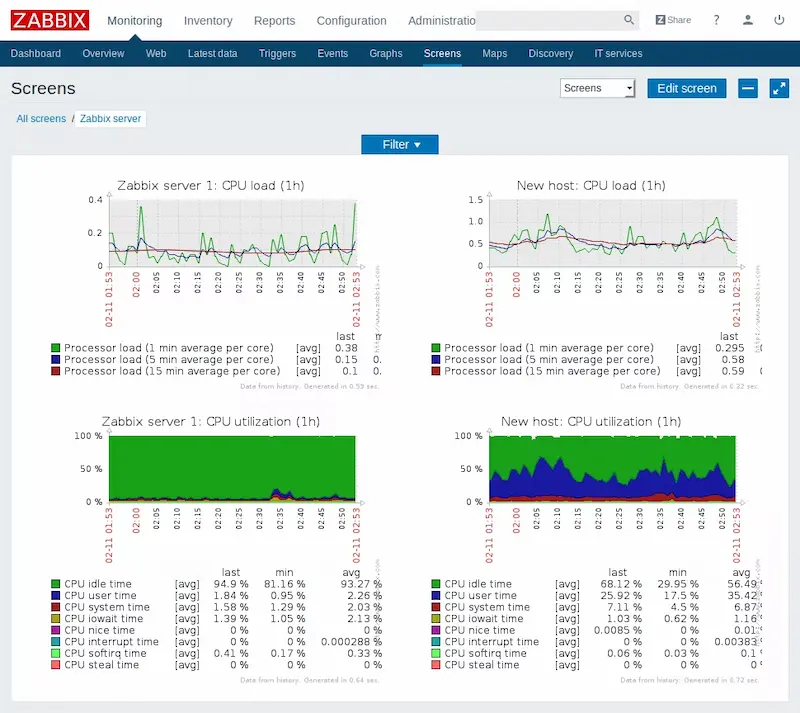

Part of keeping the infrastructure working was to ensure that it coped with high server loads during peak trade. We ran Zabbix, an open-source monitoring tool, which had lots of cool graphs.

This was a new thing to obsess over! How could I make the site run with as little server resource as possible? Also, one of the graphs was server response time, so I could see that the two were interlinked. If the servers were working hard, response times slowed down. But the opposite was also true: if I could get the servers to respond quicker, they could handle the load better.
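One standard way to see why response time and capacity are linked is Little's law: the average number of requests in flight equals the arrival rate multiplied by the average response time. The sketch below assumes a server with a fixed number of worker processes, each handling one request at a time; the figures are illustrative only, not Allsaints' real numbers.

```python
def requests_in_flight(requests_per_second: float, response_time_s: float) -> float:
    """Little's law: average concurrency = arrival rate x average time in the system."""
    return requests_per_second * response_time_s


def max_throughput(workers: int, response_time_s: float) -> float:
    """Little's law rearranged: with at most `workers` requests in flight,
    the sustainable request rate is workers / average response time."""
    return workers / response_time_s


# Illustrative: 50 workers at 500 ms per request vs. the same workers at 250 ms.
for response_time in (0.5, 0.25):
    print(f"{response_time * 1000:.0f} ms -> up to {max_throughput(50, response_time):.0f} req/s")
```

Halving the response time doubles the load the same hardware can absorb. The reverse also holds: once every worker is busy, new requests queue, and that waiting time is added to the response time.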

At this stage, I wasn't really thinking about how fast the page was loading, as long as it wasn't stuck on a white screen for ages.

It was quite a buzz when the site went on sale and transactions went up 20x overnight and I could watch the servers hardly break a sweat.

It wasn't uncommon back then for server response time to be the metric of choice for measuring web page speed. But the web was starting to care more about speed.

Moving Focus Forward

It didn't take me long to shift my focus towards the client side and look at overall page load times. I added the YSlow extension for Firebug, created by one of the godfathers of web performance, Steve Souders. YSlow was the forefather of Chrome's Lighthouse; both are invaluable tools for auditing the performance issues on a website. I combined that with reading Steve's authoritative book on the subject, Even Faster Web Sites, which is still relevant today, 13 years after it was published.

Allsaints is where I first met my friend Andy Davies. We used a monitoring tool called Site Confidence, later to become part of NCC Group and, much later, bought by Eggplant. Andy has been a source of inspiration and guidance for years. He has always offered advice when I needed it, and hopefully I took that advice forward and produced a working example of what can be achieved given sufficient focus over a long period of time.

Unfortunately my time with Allsaints was drawing to a close: I had decided to move back to Scotland, to the land and people I love. However, before I left I implemented as much as I could to make the site faster. The load time was 3.3s, although looking back I was measuring the wrong thing back then. Still, I was on the right track, as I had also measured the onLoad event, which was taking 2.3s. Not blazing fast, but pretty good for the time, 10½ years ago.

A Chance to push harder

In August 2012, I relocated back to Scotland and joined Schuh as Deputy Head of Ecommerce. But I should start my story by thanking my boss at the time, Sean McKee, who gave me a lot of support to go after this. Without his support I would not have been able to push a performance agenda, or indeed my whole optimisation agenda, including the introduction of a team of conversion rate optimisation analysts.

Even before I started, I had been asked to provide a plan for how the website could be made quicker. The path was laid. Looking back at that plan, it was a bit rudimentary, but it was a start. The biggest issue was all the third parties and external scripts on the site, which is still the case on many sites today.

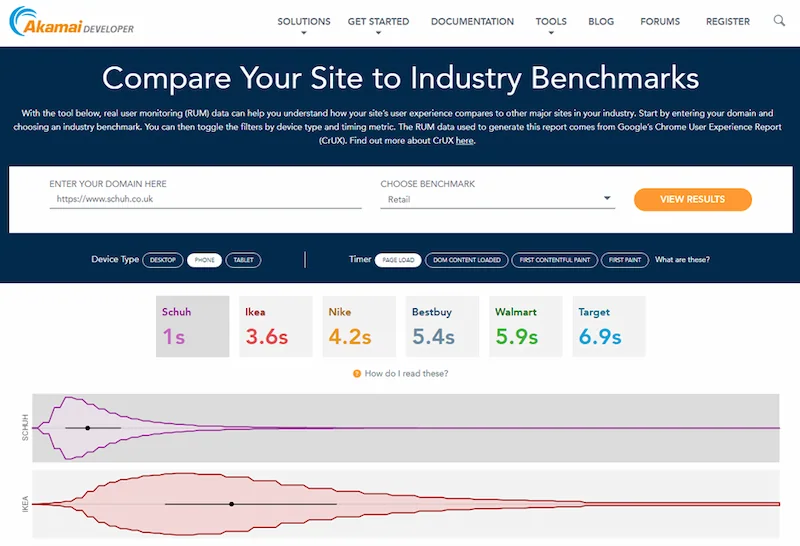

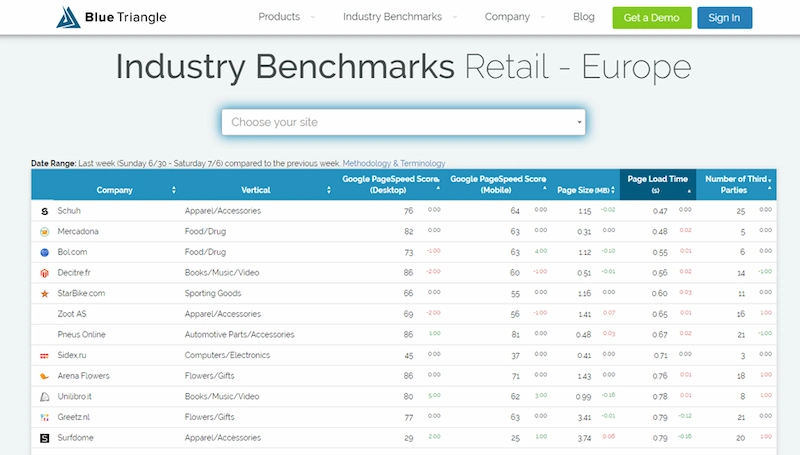

Over the seven years I was at Schuh, we worked hard to make the site faster and faster. When I started, the site loaded in 5.8s, and when I left it was loading in less than 1s, on a site with around 5M impressions per week. We became the fastest ecommerce website in Europe, and were the benchmark "fast website" that many others compared themselves to.

We saw continual improvement in the conversion rate over the years, particularly on mobile, where page speed is one of the most critical factors for success. The conversion rate improvement wasn't just down to page speed; we were also making a lot of other changes and conducting a lot of A/B tests. However, page speed was a significant factor.

Faster websites are also good for your SEO ranking, as page speed is part of Google's ranking algorithm. Also part of that algorithm is the Core Web Vitals suite of factors, one of which is cumulative layout shift (how much the page jumps about as various elements load); it was introduced in February 2021. It was actually something we were already optimising for at Schuh. I remember doing a podcast with James Gurd in February 2020, where he asked me "What is next in site speed? What will we need to look at next?" My answer was layout shift. I don't make a habit of predicting what Google will care about next, but I just happened to get that one right!
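For reference, here is a minimal sketch of how a CLS score is assembled from the individual layout-shift entries a browser reports, following Google's published definition as I understand it: each shift's score is its impact fraction multiplied by its distance fraction; shifts flagged as following recent user input are ignored; shifts are grouped into session windows (less than 1 second between shifts, at most 5 seconds per window); and CLS is the largest window total. The `LayoutShift` class is a hypothetical stand-in for the browser's entries, not a real API.

```python
from dataclasses import dataclass


@dataclass
class LayoutShift:
    start_time_ms: float
    value: float                    # impact fraction x distance fraction
    had_recent_input: bool = False  # shifts caused by user input don't count


def cumulative_layout_shift(shifts: list[LayoutShift]) -> float:
    """Return the largest session-window total of unexpected layout shifts."""
    cls = 0.0
    window_total = 0.0
    window_start = last_shift = None
    for shift in sorted(shifts, key=lambda s: s.start_time_ms):
        if shift.had_recent_input:
            continue
        same_window = (
            window_start is not None
            and shift.start_time_ms - last_shift < 1000    # under 1 s since the previous shift
            and shift.start_time_ms - window_start < 5000  # window capped at 5 s
        )
        if same_window:
            window_total += shift.value
        else:
            window_total = shift.value
            window_start = shift.start_time_ms
        last_shift = shift.start_time_ms
        cls = max(cls, window_total)
    return cls
```

Because only the worst window counts, a page that makes one jarring burst of shifts scores badly even if the rest of the visit is perfectly stable.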

One of the biggest bits of validation I had for all the work we were doing on the mobile web experience at Schuh (including page speed) came when we were conducting some moderated user testing and one of the subjects asked "Is this an app?"

We had a website that felt so slick that it felt like an installed app!

Success by a thousand cuts

Measurement

One of the most important parts of the work was building site speed metrics into our weekly KPI reporting, which was shared across the business. We recognised that a slow website made for a poor user experience, and the data showed that it was detrimental to the conversion rate. One piece of work I did was to add some custom timing to the site, which measured when the page started to show on the screen. I could then see what correlation there was between this timing and the conversion rate on the site. The data indicated that if we could speed up this initial display of the page, conversion rate would go up by around 7%.
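The analysis side of this can be sketched as bucketing sessions by how quickly the page started to show and comparing conversion rates across buckets. This is an illustrative sketch rather than the exact analysis used at Schuh: it assumes each session has already been reduced to a (first-render time in ms, converted?) pair, and the 500 ms bucket width and the sample data are made up.

```python
from collections import defaultdict


def conversion_by_render_time(sessions, bucket_ms=500):
    """Group (render_ms, converted) pairs into time buckets and return
    {bucket_start_ms: conversion_rate}, ordered from fastest to slowest."""
    totals = defaultdict(int)
    conversions = defaultdict(int)
    for render_ms, converted in sessions:
        bucket = int(render_ms // bucket_ms) * bucket_ms
        totals[bucket] += 1
        conversions[bucket] += int(converted)
    return {bucket: conversions[bucket] / totals[bucket] for bucket in sorted(totals)}


# Hypothetical sessions: (time until the page started to show, in ms; did it convert?)
sample = [(300, True), (450, False), (700, True), (800, False), (900, False), (1600, False)]
for start, rate in conversion_by_render_time(sample).items():
    print(f"from {start} ms: {rate:.0%} conversion")
```

In practice each bucket needs enough sessions for its rate to mean anything, which is why this works best on weeks of real traffic rather than a handful of visits.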

Programming, People and Processes

There were many technical changes to the site (listed below), but one of the biggest factors was the people and processes. Reporting is part of those processes, but before that comes setting expectations on what is and isn't acceptable on the site. Overly large images can seriously slow down page load, so limits were set on the file sizes allowed. It was then part of the process to produce amazing images within those file size constraints. That comes down to training people on which decisions impact image sizes; for example, don't embed text in images, as it doesn't compress well.
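A file-size budget like this is easy to enforce automatically. The sketch below is hypothetical: the per-format limits are placeholders (Schuh's actual figures aren't given here), and it simply reports any image over the limit for its file type.

```python
from pathlib import Path

# Placeholder budgets in KB per file type; not Schuh's real limits.
BUDGETS_KB = {".jpg": 150, ".jpeg": 150, ".png": 100, ".webp": 120, ".svg": 20}


def over_budget(sizes_bytes: dict[str, int]) -> list[str]:
    """Return the names of images whose size exceeds the budget for their type."""
    failures = []
    for name, size in sizes_bytes.items():
        limit_kb = BUDGETS_KB.get(Path(name).suffix.lower())
        if limit_kb is not None and size > limit_kb * 1024:
            failures.append(name)
    return failures


def check_directory(root: str) -> list[str]:
    """Apply the budgets to every file under `root` (e.g. an image upload folder)."""
    sizes = {str(p): p.stat().st_size for p in Path(root).rglob("*") if p.is_file()}
    return over_budget(sizes)
```

Run as part of the publishing process, a non-empty result would send the image back for re-export before it ever reaches the site.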

Site changes

There were many changes, big and small, which contributed to making the Schuh website very fast. Website performance is determined by 3 main areas:

- The Server.

- The Network.

- The Client.

All of the changes we made were to improve one of those areas, and so I have organised the changes by area, not by chronological order or impact.

The Server

I have talked above about my time at Allsaints and improving the server response time, but when I moved to Schuh this was actually the area of performance I had least input into. We hadn't implemented the Server-Timing header, so I had no visibility of what was happening on the back end. However, the dev team had worked hard to refactor the code base as part of an overhaul of the site, moving from separate sites per device to a responsive site, and generally the HTML was being returned in about half a second.
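For anyone in the same position, the Server-Timing response header is cheap to add. Below is a minimal sketch of collecting back-end timings and formatting them in the header's `name;dur=milliseconds;desc="..."` syntax. The `ServerTimer` class and the metric names are hypothetical, and attaching the value to the response depends on your framework.

```python
import time
from contextlib import contextmanager
from typing import Optional


class ServerTimer:
    """Collect named back-end timings for a single request."""

    def __init__(self) -> None:
        self.metrics: list = []  # (name, duration_ms, description) tuples

    @contextmanager
    def measure(self, name: str, description: Optional[str] = None):
        start = time.perf_counter()
        try:
            yield
        finally:
            elapsed_ms = (time.perf_counter() - start) * 1000
            self.metrics.append((name, elapsed_ms, description))

    def header_value(self) -> str:
        """Format the collected metrics as a Server-Timing header value."""
        parts = []
        for name, dur_ms, desc in self.metrics:
            part = f"{name};dur={dur_ms:.1f}"
            if desc:
                part += f';desc="{desc}"'
            parts.append(part)
        return ", ".join(parts)


timer = ServerTimer()
with timer.measure("db", "Product query"):
    time.sleep(0.01)  # stand-in for real back-end work
print("Server-Timing:", timer.header_value())
```

The browser shows these timings in the network panel of its dev tools, which gives front-end and back-end developers the same view of where the time goes.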

The Network

- Deferring 3rd party scripts. This was one of the first things we did, moving as many Javascript calls as possible to the bottom of the page. When I first joined Schuh, third party scripts were almost doubling the page load time.

- GTM second container. Following on from trying to defer as much Javascript as possible, we added a second Google Tag Manager container to the page. This was triggered once the page had loaded, and it then called a lot of the third party scripts we used. The second container gave us a lot more control.

- Eliminate redirects. When we first started improving the speed, there were many pages which redirected to other URLs, sometimes over multiple hops. Each redirect results in an additional HTTP request.

- HTTP/2. I pushed for the entire site to be HTTPS, partly because I wanted to use the latest version of the HTTP protocol at the time, HTTP/2, which has a lot of performance optimisations baked in (browsers only support HTTP/2 over HTTPS).

- Self-hosting jQuery (and similar). There were a number of scripts hosted on 3rd party domains, for example jQuery. In theory this is done so that if the client has already visited a website which uses the same script, it doesn't need to download it again. However, this never actually happens in practice (modern browsers partition their caches by site, so a copy cached on another site isn't reused), and all that happens is that you need to open up another HTTP connection, which takes additional time. Serve it from your own domain and save the connection.

- Preconnect to Cloudfront. We used Cloudfront as our CDN, so connecting to Cloudfront was on the critical path and we needed to make it as quick as possible. We added an HTTP header telling the browser to preconnect to Cloudfront, so that it could start that connection as soon as the response headers arrived, rather than waiting until it parsed the HTML and found the first Cloudfront URL.

- HSTS. A small win, but HSTS tells the browser that the website will always be served via HTTPS, so when a request is made for an HTTP URL the browser can switch to HTTPS itself instead of waiting for a redirect from the server.

- Eliminate EV cert & OCSP stapling. Schuh used an Extended Validation SSL certificate. These are fancier than your bog-standard SSL certificate but offer no real benefit; they have recently been made redundant. However, using an EV certificate stopped us from being able to use OCSP stapling, a technique in which the server includes the certificate's revocation status in the TLS handshake, so the browser doesn't need a separate connection to the certificate authority to check it. Schuh moved away from the EV cert not long after I left.

- You host where? We ran a trial of a service called Aspectiva (now owned by Walmart), and we noticed that it was causing a lot of lag on the page load.

The development team reported that "the widget is slow to respond", and indeed it was. However, when I investigated deeper and ran a traceroute (something the Schuh dev team had no experience of), I could see that it was taking 17 hops to reach their server. More importantly, those hops made their way across the Atlantic, all the way to the West Coast of the US. On reporting this to Aspectiva, they said they couldn't replicate the issue; however, coincidentally, a few days later they moved their hosting to the cloud and response times improved.
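A few of the network items above translate into small bits of server configuration or logic. The sketch below is illustrative: the CDN hostname is a placeholder, the one-year HSTS max-age is a common choice rather than Schuh's actual setting, and `flatten_redirects` is a hypothetical helper that collapses multi-hop redirect rules so every old URL points straight at its final destination.

```python
CDN_ORIGIN = "https://example.cloudfront.net"  # placeholder CDN hostname


def performance_headers() -> dict[str, str]:
    """Response headers for the preconnect and HSTS items."""
    return {
        # Start the CDN connection as soon as the response headers arrive
        # (add "; crossorigin" if the CDN serves fonts or other CORS requests).
        "Link": f"<{CDN_ORIGIN}>; rel=preconnect",
        # Always use HTTPS for this host and its subdomains for the next year.
        "Strict-Transport-Security": "max-age=31536000; includeSubDomains",
    }


def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Map every source URL directly to its final destination (one hop each)."""
    flattened = {}
    for source, target in redirects.items():
        seen = {source}
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop starting at {source}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


rules = {"/sale": "/offers", "/offers": "/offers/", "/offers/": "/womens/sale"}
print(flatten_redirects(rules))
```

Flattening the rules removes the extra hops, but the best fix is still to update internal links so they point at the final URL and no redirect is needed at all.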

The Client

- Eliminate or defer all in-line JS, move scripts to bottom. When I first joined Schuh, the pages were bloated with Javascript (and `<style>` blocks), most of which was either not required at all or not required for the initial page load. Almost all this Javascript was either removed or moved to an external script, called at the bottom of the page once the page had loaded.

- Move on-page `<style>` blocks. As above, CSS was moved to external stylesheets, which meant it could be cached between pages. It also meant that in many cases it could be eliminated.

- Fonts. I am no lover of external fonts for their own sake; I'm not sure in many cases that they improve the user experience, or indeed are noticeable to the users. However, I recognise that they are considered an important part of branding. We experimented with the best way of loading fonts, settling on `font-display: swap;`.

- Image formats. We introduced the `<picture>` element for responsive images, although when I was there we hadn't tried using newer image formats such as WebP. We used an increasing number of SVGs to replace icon fonts, reducing byte count and usually increasing quality.

- Using a placeholder for a slow third party. The on-site chat provider's code was fairly slow to serve, so the page would load and then their widget would appear in quite a jarring fashion. We replaced this with some progressive enhancement: a placeholder which, on load, took up the same space as the 3rd party widget but was a link to a static help page. The 3rd party widget then loaded over the top of this placeholder.

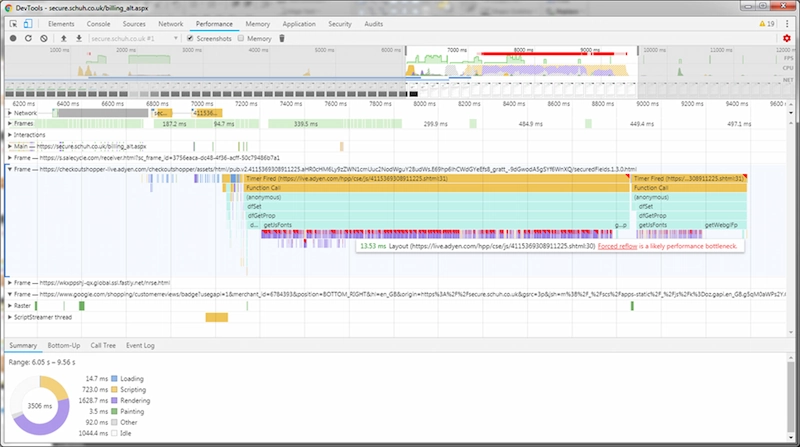

- Adyen font sniffing. Adyen, the payment platform, have a range of checkout solutions. We were a new client and rolled out a new checkout with them. As part of the acceptance testing, it was observed that "…the payment form takes a few seconds to appear (normal behaviour), but it only partially loads (although perhaps looks fully loaded). It takes another 2 or 3 seconds to fully load. The form fields can't be interacted with until it has 'fully loaded'." and "Scrolling the form fields in to view is slow and janky." I had a look at it, and sure enough the browser was hanging. Profiling it in Chrome DevTools showed that the browser was executing a slow script. It wasn't the Javascript itself that was slow; it was the way it interacted with the page. The script was part of a fraud prevention tool, which fingerprinted the browser by looking at its unique installation profile, including which custom fonts were installed. The script added a bit of text to the page and tried to apply a font from a long list; if the font was installed, the size of the text would change. It then moved on to the next font. Every time it measured the width of the bit of text, the browser had to lay out the page, which takes some effort and really adds up over a few hundred fonts. I suggested to Adyen that they move this to a `<canvas>` element, which would mean the browser does not need to lay out the whole page, eliminating the slow execution without changing the output of the script. Adyen were delighted to work with us on this, as their own team hadn't found the issue. They made the change and it was high-fives all around.

- Use newer CSS instead of JS for layout. One example of this was the homepage banners, which had text overlays as calls-to-action. Historically, they were positioned by Javascript after the page had loaded, which made the page jump about a bit after the initial fast page load. By doing in CSS the same calculation the Javascript did, the problem was eliminated and the text displayed in the correct location from the start. This was an example of where staying on top of current CSS innovations really pays off: I was able to feed this into the dev team.

- PWA. We were doing PWAs before they were cool; we first started looking at them in 2018. Allowing the site to be installed easily on a user's phone and making it more tolerant of spotty network conditions, combined with the overall fast page speed, moved the mobile experience further towards a native app experience, without the development overheads and management complexity of having a separate app.
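The Adyen issue is a case of what is often called layout thrashing: writing a style and then immediately reading a size forces the browser to lay out the page synchronously, once per iteration. The sketch below is a deliberately simplified toy model in Python, not browser code. `ToyPage` stands in for the document (a style change marks layout as dirty; reading a width triggers a layout if it is dirty), and `probe_fonts_off_page` stands in for measuring text on a `<canvas>`, which involves no page layout. The widths are fake; the point is the layout count.

```python
def fake_text_width(text: str, font: str) -> int:
    """Stand-in for a real text measurement; the numbers are arbitrary."""
    return len(text) * (1 + len(font) % 3)


class ToyPage:
    """Toy document: style writes mark layout dirty, size reads force a layout."""

    def __init__(self) -> None:
        self.font = "default"
        self.dirty = False
        self.layouts = 0

    def set_font(self, font: str) -> None:  # like element.style.fontFamily = font
        self.font = font
        self.dirty = True

    def text_width(self, text: str) -> int:  # like reading element.offsetWidth
        if self.dirty:
            self.layouts += 1  # forced synchronous layout of the page
            self.dirty = False
        return fake_text_width(text, self.font)


def probe_fonts_in_page(page: ToyPage, fonts: list[str], sample: str = "mmmmmmmmmmlli") -> dict[str, int]:
    widths = {}
    for font in fonts:
        page.set_font(font)                     # write...
        widths[font] = page.text_width(sample)  # ...then read: one layout per font
    return widths


def probe_fonts_off_page(fonts: list[str], sample: str = "mmmmmmmmmmlli") -> dict[str, int]:
    # Stand-in for measuring with a canvas context: no page layout is involved.
    return {font: fake_text_width(sample, font) for font in fonts}


fonts = [f"Font {i}" for i in range(300)]
page = ToyPage()
same = probe_fonts_in_page(page, fonts) == probe_fonts_off_page(fonts)
print(f"page layouts: {page.layouts}, same result off-page: {same}")
```

In a real browser, the off-page equivalent would be measuring with the canvas context's `measureText()`, so the results can stay the same while the per-font page layouts disappear.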

It was really satisfying to see these improvements going live at Schuh, it was a real team effort and I hope when the team looks back they may have forgiven me for sounding like a broken record about page speed!

Some closing thoughts

Too many websites are excessively complex. In principle, you should be able to use your website with CSS and JS turned off. If you can make your site work like that it will be better for performance, accessibility and SEO, all of which are great for improving revenue. A simpler site is also easier (cheaper) to maintain, as complexity adds brittleness.

Developers: I don't think you need that much Javascript to make the website work as intended. Care to change my mind?

That's not to say your website can't look beautiful. The look is a factor of the CSS (maybe with some progressively enhanced Javascript on top). But build that beauty on top of a solid foundation.

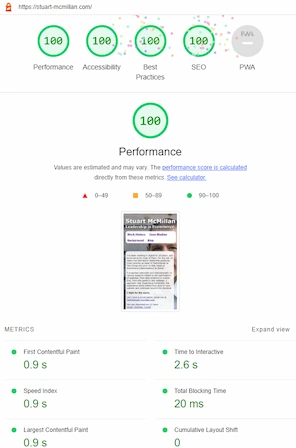

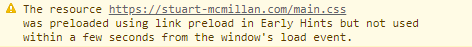

I have tried to build this website as well as possible; you can read about it here: all about this website. I have continued to keep my knowledge of web performance up to date. For example, on this site I have rolled out HTTP 103 Early Hints, which currently only works in Chrome. I have had to add some conditional logic on my server to only send it to Chrome user agents, as it was causing an issue with Safari.
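Conditional logic like this can be as simple as a user-agent check. This is a hypothetical sketch, not the code running on this site: `wants_early_hints` only allows hints when the user-agent string contains `Chrome/` (Chrome's does, and so do other Chromium-based browsers such as Edge; Safari's doesn't, and neither does Chrome on iOS, which reports `CriOS/` because it runs on WebKit). `early_hints_links` builds the `Link` header values for the 103 response; how the 103 is actually emitted depends on your server or CDN.

```python
def wants_early_hints(user_agent: str) -> bool:
    """Only send 103 Early Hints to Chromium-based browsers (hypothetical rule)."""
    # Chrome's UA contains "Chrome/"; Safari's doesn't, and neither does
    # Chrome on iOS, which reports "CriOS/" instead.
    return "Chrome/" in user_agent


def early_hints_links(preloads: list[tuple[str, str]]) -> list[str]:
    """Build Link header values for a 103 response from (path, destination) pairs."""
    return [f"<{path}>; rel=preload; as={as_type}" for path, as_type in preloads]


CHROME_UA = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
             "(KHTML, like Gecko) Chrome/106.0.0.0 Safari/537.36")
SAFARI_UA = ("Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
             "(KHTML, like Gecko) Version/16.0 Safari/605.1.15")

for ua in (CHROME_UA, SAFARI_UA):
    if wants_early_hints(ua):
        print(early_hints_links([("/css/site.css", "style"), ("/js/app.js", "script")]))
```

User-agent sniffing is brittle, so a check like this is best treated as a temporary workaround until the browsers that misbehave are fixed.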

If you think I have missed something to make it even faster, please let me know!

If you want any advice on how to make your website faster, I would be happy to help, please get in touch.